Motivation

NeRF and 3D Gaussian Splatting have achieved remarkable results, revolutionizing 3D scene representation and rendering. However, they require many high-quality images, which limits their applicability. To address this, many works focus on the sparse-view setting, where only a handful of views are available. Complex geometries and large-scale environments are particularly problematic in this setting and lead to artifacts such as floaters. Several works introduce depth and structural priors to compensate, but such priors are hard to obtain in practice.

Method

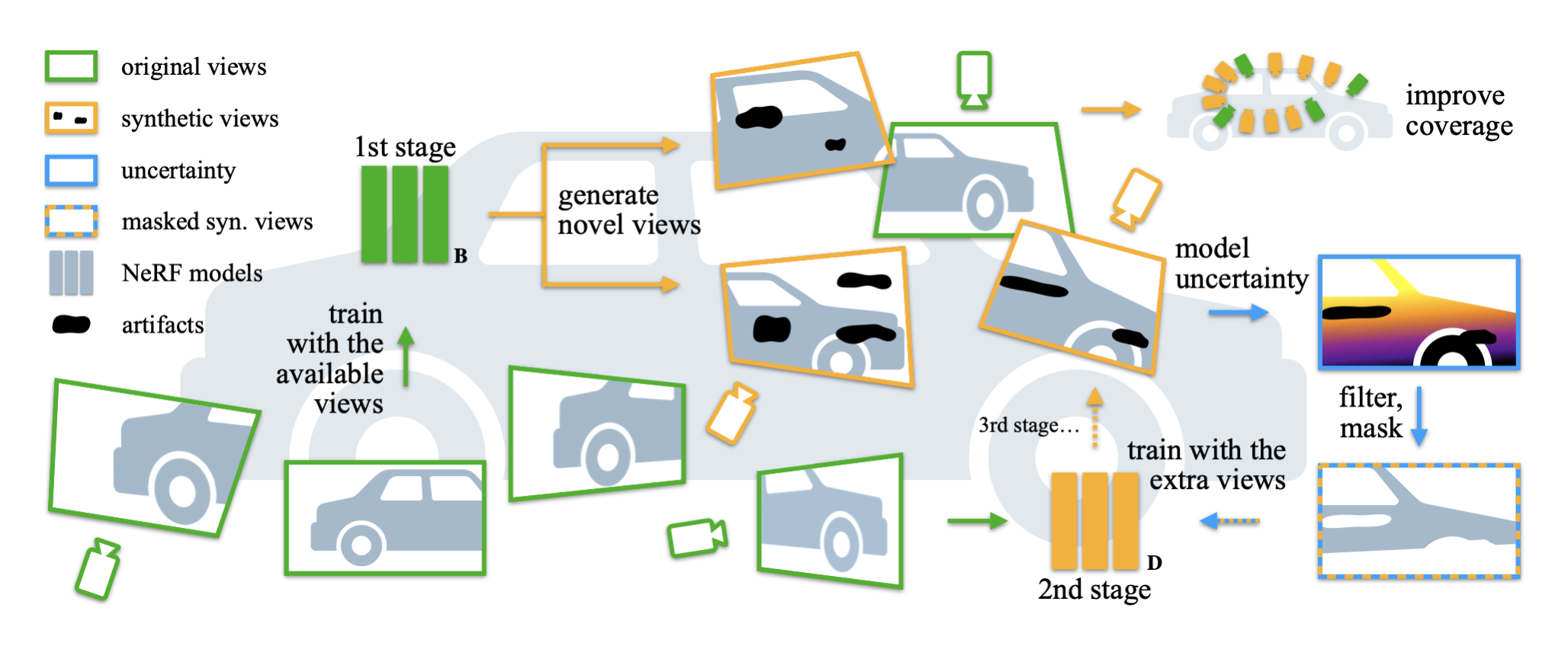

In this paper, we propose Re-Nerfing, a simple and effective method that improves novel view synthesis without using extra data, models, or information. Re-Nerfing is a flexible, iterative augmentation strategy that applies to both NeRF-based and Gaussian Splatting-based methods. As shown in the figure above, we first train a baseline model on the available views (green). We then use this model to synthesize novel views of the same scene or object, improving view coverage (orange). Next, we add these synthesized views to the training data and train a new model on the augmented set. This process can be repeated iteratively, using each new model to synthesize the next set of novel views. For NeRF-based methods, we additionally compute the model uncertainty (blue) and discard uncertain regions of the synthetic views, so that the new model receives a higher-quality training signal.
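The train, synthesize, augment, retrain loop can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation. `re_nerfing`, `View`, `train_fn`, `render_fn`, `max_uncertainty`, `toy_train`, and `toy_render` are all hypothetical names. The toy "model" is a pose-weighted blend of training images standing in for a real NeRF or 3DGS pipeline. Its "uncertainty" (distance to the nearest view that supervises a pixel) is a placeholder, not the uncertainty estimate used for NeRF-based methods. We also assume that each round regenerates the synthetic views from the latest model and trains a fresh model on the real views plus those synthetic views.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class View:
    pose: float          # hypothetical 1-D camera parameter (e.g. azimuth)
    image: np.ndarray    # H x W x 3 colors in [0, 1]
    mask: np.ndarray     # H x W bool; True where the pixel supervises training


def re_nerfing(real_views, train_fn, render_fn, novel_poses, n_rounds=2, max_uncertainty=None):
    """Train, synthesize novel views, augment, retrain; repeat n_rounds times."""
    model = train_fn(list(real_views))  # baseline trained on the real views only
    for _ in range(n_rounds):
        synthetic = []
        for pose in novel_poses:
            image, uncertainty = render_fn(model, pose)
            if max_uncertainty is None:
                # No uncertainty filtering: every synthetic pixel is kept.
                mask = np.ones(image.shape[:2], dtype=bool)
            else:
                # NeRF-style variant: drop pixels whose uncertainty is too high.
                mask = uncertainty <= max_uncertainty
            synthetic.append(View(pose, image, mask))
        # Assumption: each round trains a fresh model on real + latest synthetic views.
        model = train_fn(list(real_views) + synthetic)
    return model


# --- Hypothetical toy stand-ins so the loop runs; NOT a radiance field. ---
def toy_train(views):
    poses = np.array([v.pose for v in views], dtype=float)
    images = np.stack([v.image for v in views])   # N x H x W x 3
    masks = np.stack([v.mask for v in views])     # N x H x W
    return poses, images, masks


def toy_render(model, pose):
    poses, images, masks = model
    dist = np.abs(poses - pose)[:, None, None]               # N x 1 x 1
    weights = masks / (dist + 1e-3)                           # masked pixels get zero weight
    total = np.maximum(weights.sum(axis=0), 1e-12)            # H x W
    image = (weights[..., None] * images).sum(axis=0) / total[..., None]
    # Toy "uncertainty": distance to the nearest view that supervises each pixel.
    uncertainty = np.where(masks, dist, np.inf).min(axis=0)
    return image, uncertainty


# Usage: three real views; the novel pose 5.0 lies far from all of them.
rng = np.random.default_rng(0)
real = [View(p, rng.random((4, 4, 3)), np.ones((4, 4), dtype=bool)) for p in (0.0, 1.0, 2.0)]
poses, images, masks = re_nerfing(
    real, toy_train, toy_render, novel_poses=[0.5, 1.5, 5.0], max_uncertainty=1.0
)
```

With `max_uncertainty=1.0`, every pixel of the view synthesized at pose 5.0 is masked out, so that view adds no supervision. Passing `max_uncertainty=None` keeps every synthetic pixel, which corresponds to the case where no uncertainty is computed.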

Results

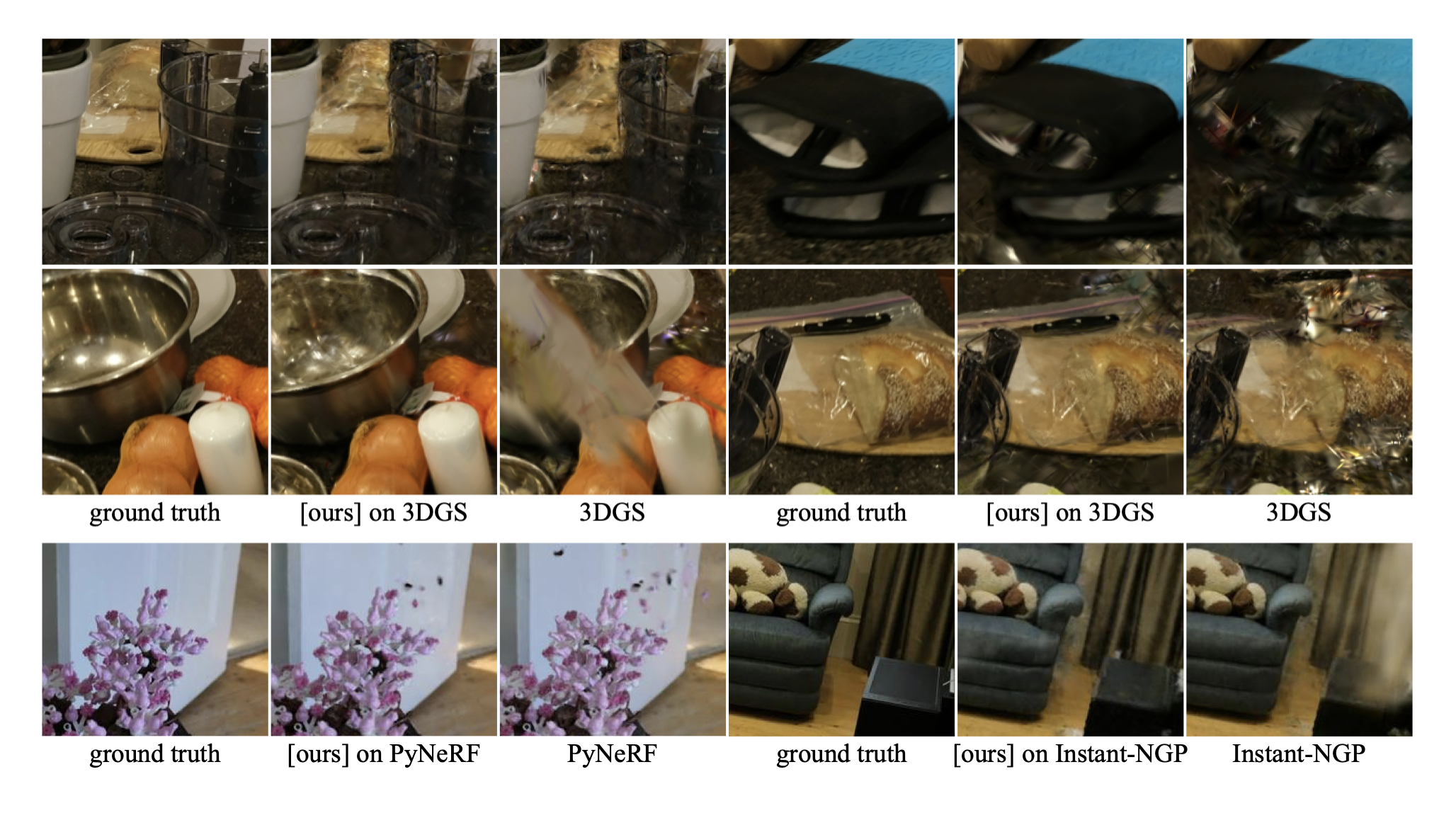

Re-Nerfing significantly improves upon various pipelines, including 3D Gaussian Splatting (3DGS), PyNeRF, and Instant-NGP. By adding more views, albeit synthetic ones, the proposed method reduces artifacts and mitigates the shape-radiance ambiguity.